Eight years after its release, ByteDance’s social media app, TikTok, has been banned in sixteen countries across Europe, Asia, and Oceania. The company faces lawsuits over suicides and privacy violations, and it has spent billions in legal fees. With a U.S. ban looming in April 2025 unless the app is sold to American owners, we must ask: is TikTok unethical?

Like other social media platforms, TikTok collects extensive user data to personalize content, enhancing user experience and fostering engagement. Metrics like likes, shares, and video duration feed TikTok’s algorithm. RE’s machine learning teacher, Dr. David Nunez Garcia, describes it as a “neural network” that learns from data to replicate user patterns.

This personalization fosters communities, such as “BookTok” and mental health awareness groups, providing solidarity and spreading awareness. The hashtag “#anxiety,” for example, has over 1.4 billion views, normalizing mental health discussions. Many creators post videos guiding people through struggles like eating disorders, with positive affirmations often in the comments. For many, these communities are pivotal in offering comfort in an overwhelming world.

TikTok has also amplified marginalized voices and progressive movements. The #StopAsianHate movement raised awareness about racism during the COVID-19 pandemic, and trends like “underconsumption core” and “deinfluencing” promote sustainable habits. These examples highlight TikTok’s potential to inspire positive societal change.

But this influence comes at a cost.

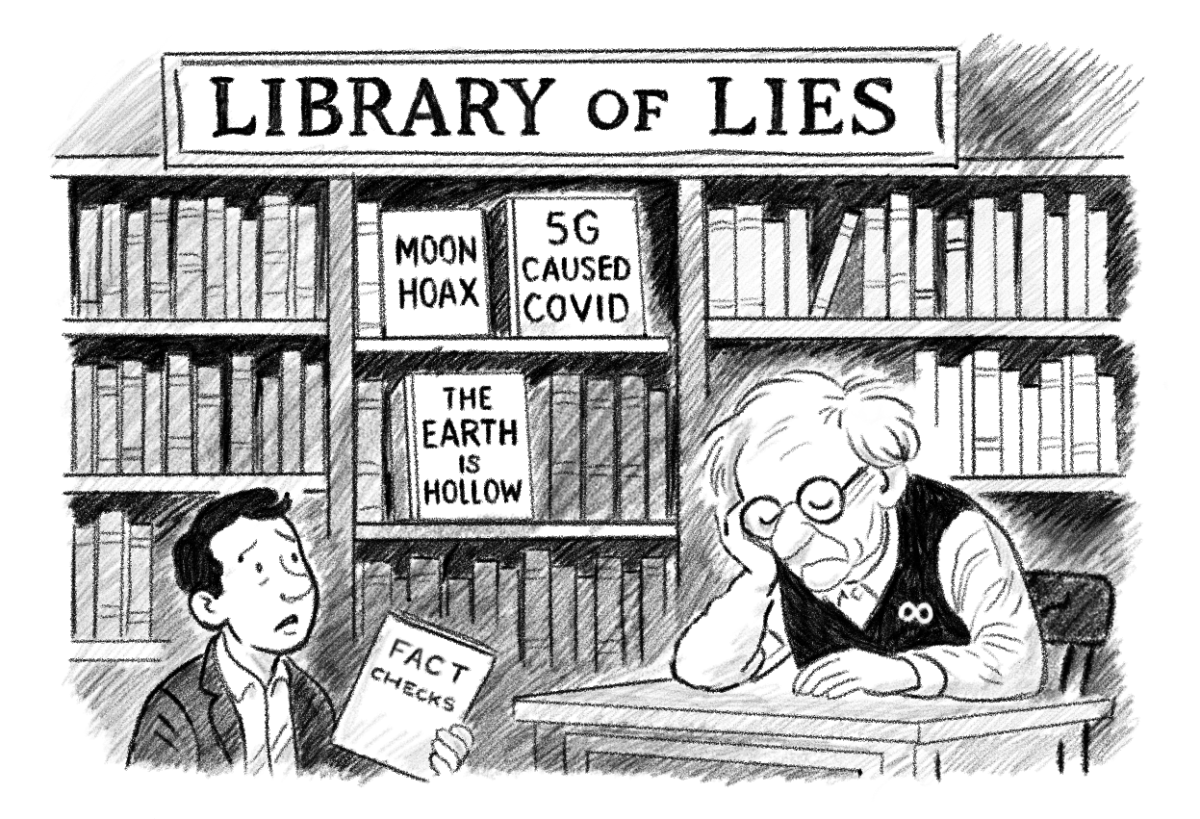

TikTok’s data collection threatens privacy rights. Accepting its terms grants access to extensive data, a fact hidden deep in a confusing privacy policy. Beyond tracking camera and microphone use, the app collects metadata (revealing the location and time of videos), payment information, keystroke patterns, IP addresses, and identifiable landmarks. It even captures biometric identifiers like fingerprints and facial recognition data, ostensibly for filters but also for vague “safety” and “analytics” purposes. TikTok’s policy allows sharing this sensitive data across ByteDance’s corporate group, which has ties to the Chinese government. In 2022, ByteDance admitted that its China-based employees used TikTok geolocation data to track American journalists.

Although the app’s content algorithm has helped some users cope with mental health issues, it has also hurt others. Trends like “jawline checks” and beauty filters reinforce Eurocentric beauty standards. “#whatieatinaday” glorifies disordered eating, suggesting a bowl of ice and half of a cucumber as a good lunch, while mental health content often romanticizes depression and self-harm. Vulnerable users cannot control their feeds, making harmful content inescapable. Even positive trends can exacerbate existing issues.

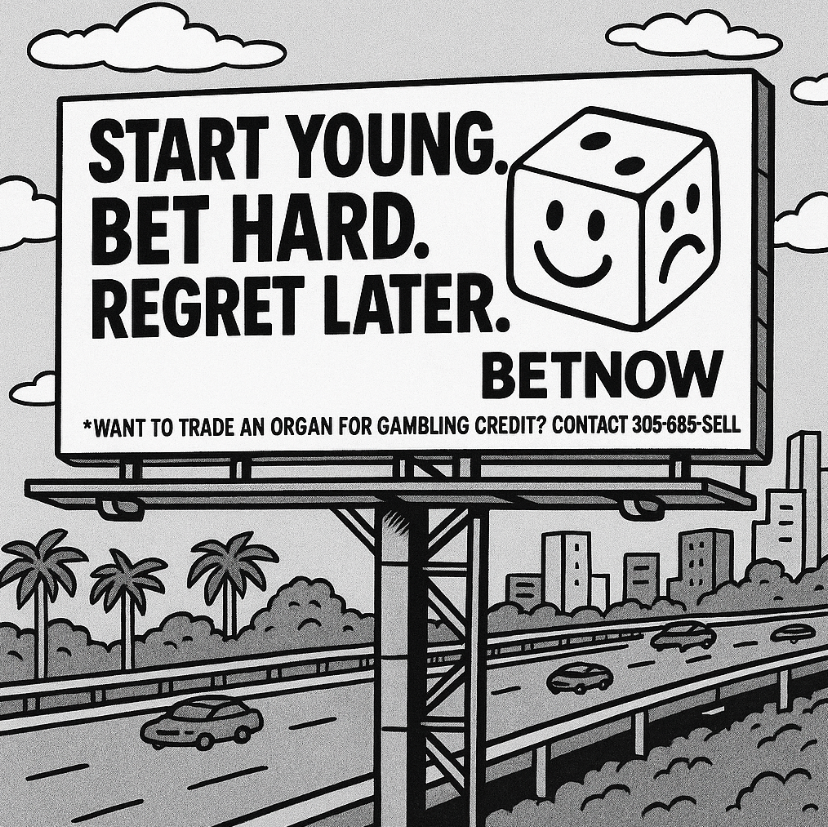

The app’s short-form content fosters addiction, as it delivers dopamine hits with every video. Internal research revealed that it takes just 35 minutes of scrolling to form a habit, and that app reminders to curb usage are ineffective. James Pfleger ’27 admitted he had spent “eight hours” on TikTok in a single day.

TikTok’s dual nature – supporting marginalized voices while amplifying harmful content – reveals its core contradiction. Progressive trends like #StopAsianHate coexist with commodified aesthetics of serious issues. TikTok profits from engagement-driven trends, often at the expense of user well-being. The platform’s prioritization of growth and profit undermines its potential for genuine positive impact.

Dr. John King, Head of the RE Holzman Center of Applied Ethics, said, “Are they doing something illegal? Probably not. Are they doing something unethical? Totally.”

Despite the communities that might have formed along the way, TikTok’s lack of content moderation tells us that the company lets its desire for higher profits outweigh its ethical obligation not to harm or threaten society.

The app must be held accountable for the moral implications of its practices. Users, parents, and policymakers should reflect on whether we can accept a platform that thrives on privacy violations, harmful content, and addictive design.

While one could argue that we cannot blame TikTok for the content posted on it, the platform still bears responsibility for ensuring user safety. Yes, free speech is a fundamental right, but it must coexist with reasonable regulations, particularly on a platform dominated by so many young, vulnerable people. Without moderation, harmful content and trends can spread unchecked.

TikTok has the tools to regulate content, but it often fails to enforce meaningful safeguards. The platform bans explicit hate speech or violence, but its enforcement is inconsistent, and problematic trends persist.

The app must implement stricter policies to remove harmful content and provide transparent, user-friendly explanations of its data collection. It also needs to limit the autoplay feature for videos to make the app less addictive.

Until TikTok addresses these concerns and implements solutions for them, it will remain an unethical platform.